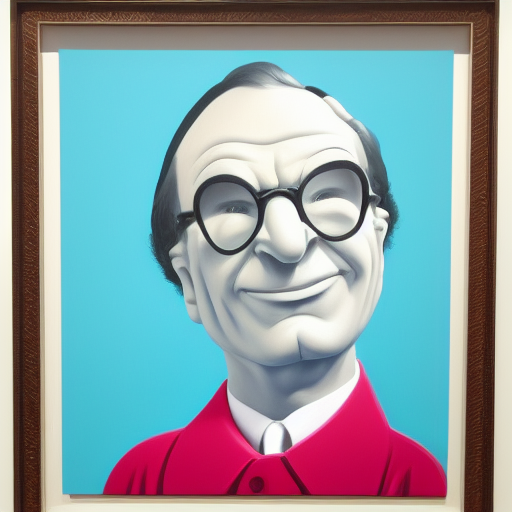

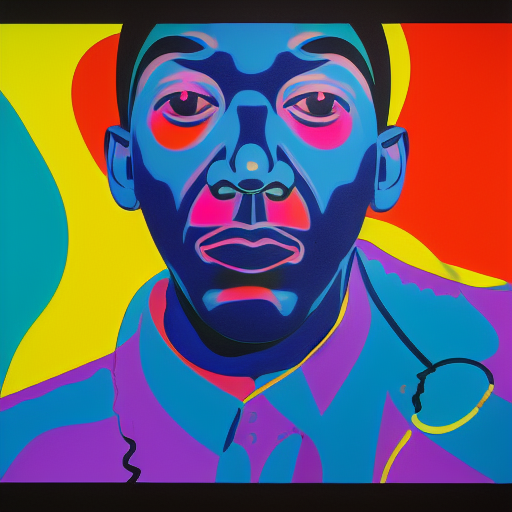

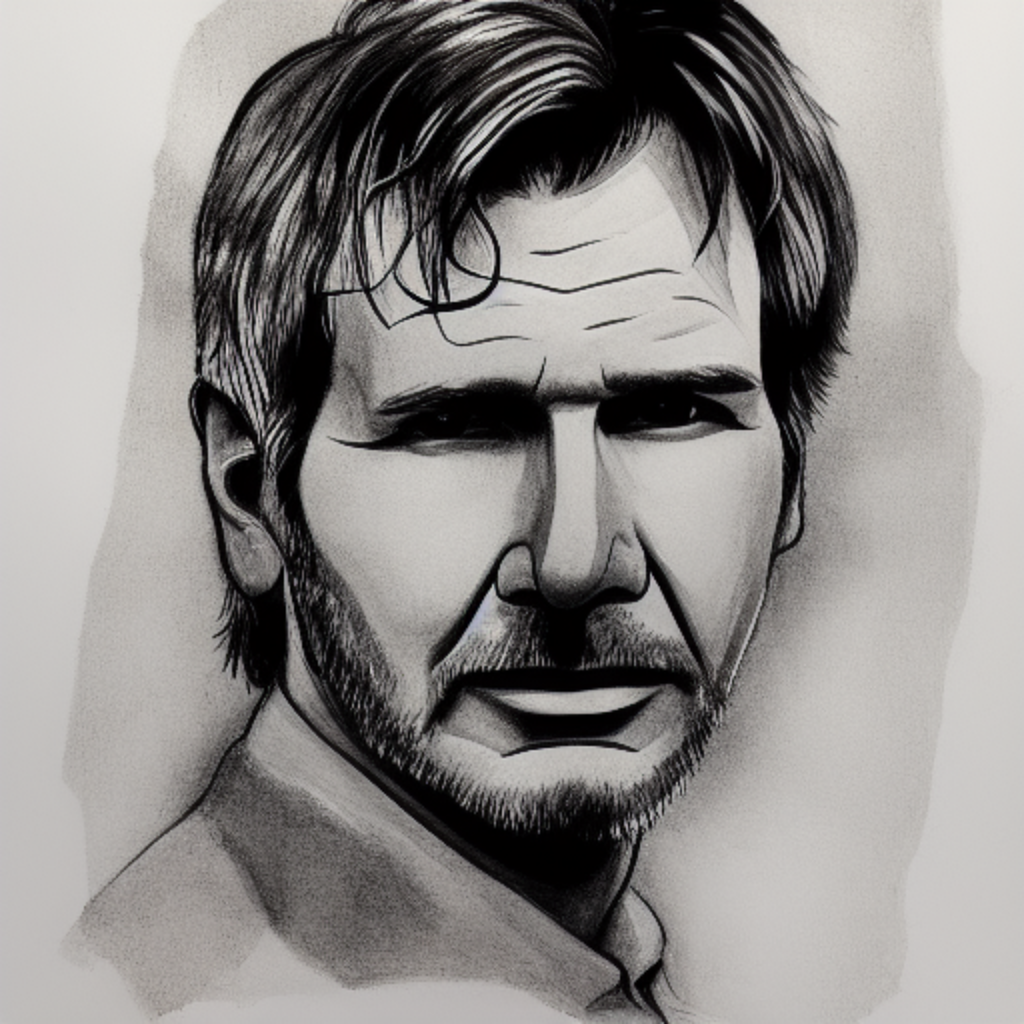

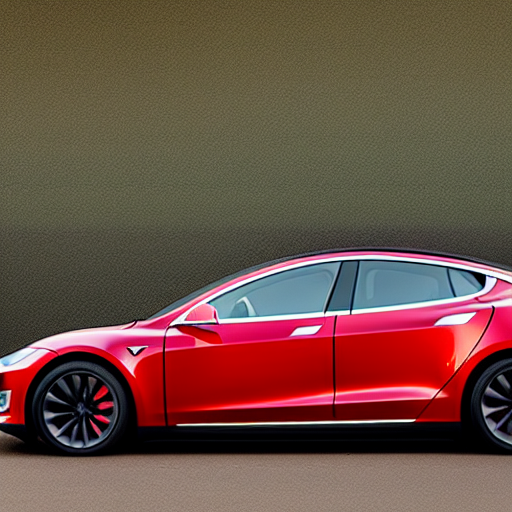

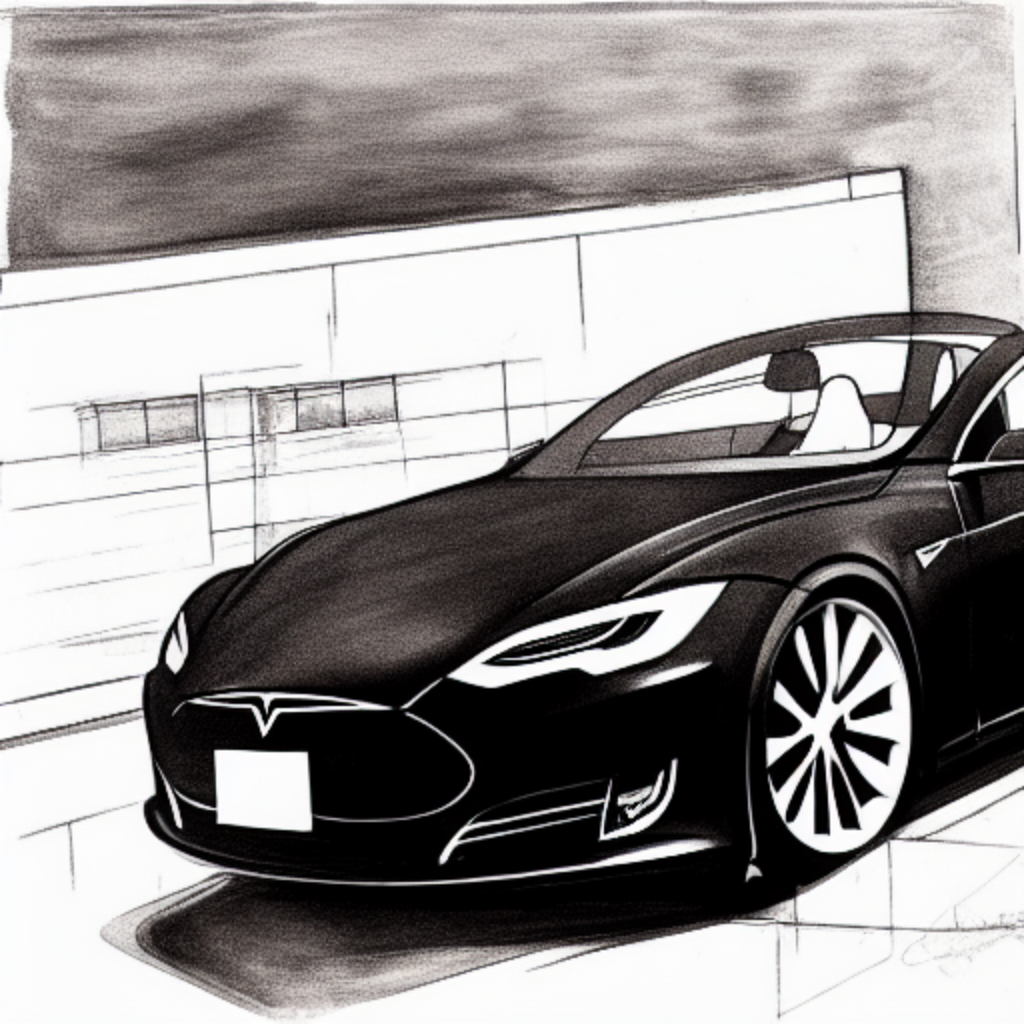

Who needs stock photos when you can create your own content on demand with a few keywords?

This is the level of disruption we’re experiencing now with DALL-E, Stable Diffusion and other machine learning models. Unlike OpenAI’s DALL-E, Stable Diffusion has been released by Stability.ai as open source, and it runs on regular computers without the need for heavy-duty GPU hardware. You can test both with free online accounts.

What happens to artists when everybody has the tools to create new visual content based on extensive machine learning models?

We are already starting to see a flood of visual content, since it’s so easy to generate automatically or by manual iteration. This has created demand for new, specialised roles such as prompt specialists and curators, and digital art miners and historians, among others.

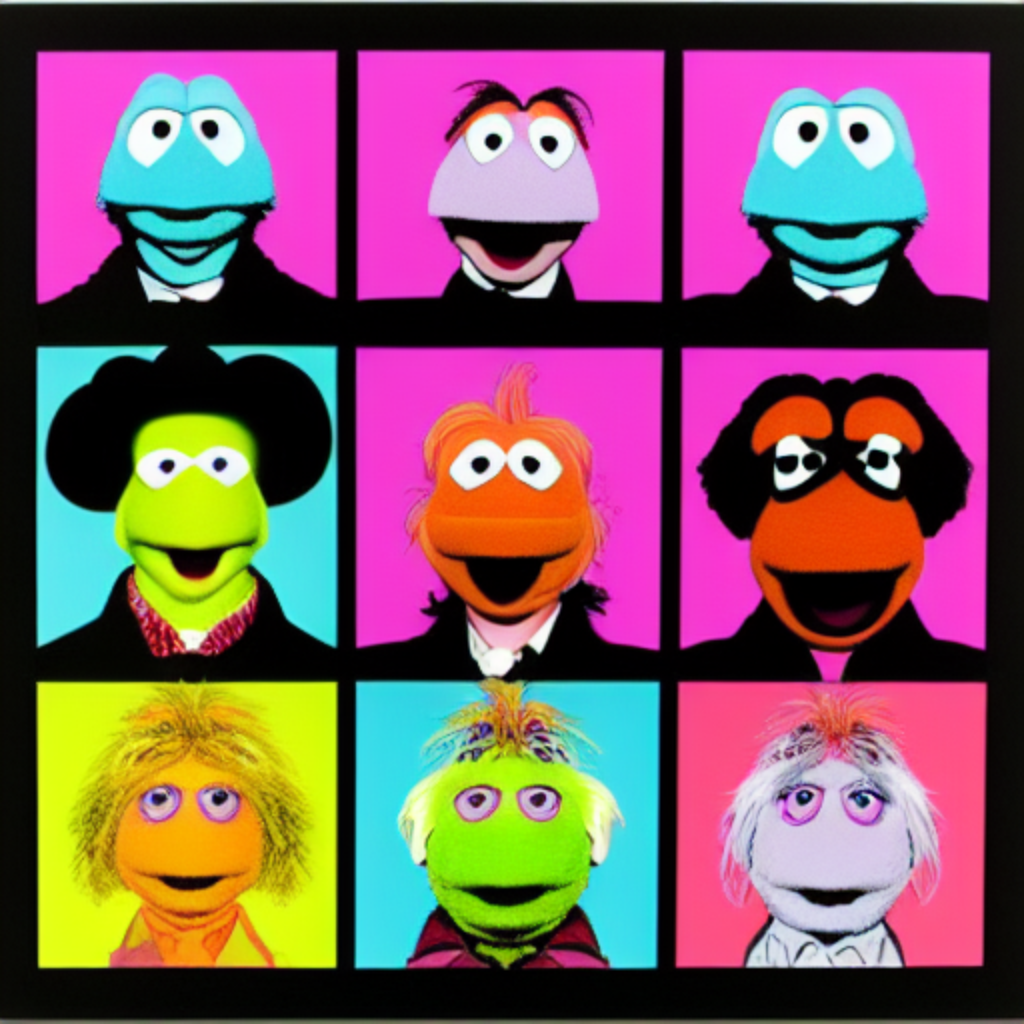

It did not take long for people to figure out that you can generate tons of NFTs and JPEG monkeys with these models. But who’s going to buy all that freshly minted visual content? Check for yourself to get an idea of what’s out there already.

It’s not just visual content. The same disruption is coming to audio and video content as well.

Who should be worried – creatives, artists, everybody or nobody?

New tools have always come to market as technology has improved. This is not a new phenomenon. Photographs replaced commissioned portraits when cameras and their processes became more affordable. The old ways did not go away entirely, but they became more specialised and required more creative input to stay relevant.

Digital cameras unlocked the picture taking that had been held back by the cost of developing film and the time delay involved, but the massive scale-up happened only when mobile phones started to have good enough cameras.

Is content generated by machine learning creative or artistic?

This debate is ongoing and it’s fascinating to follow the different points of view. Humans have always imitated, copied, modified, altered, improved and iterated on anything and everything they have managed to get their hands on. Hip-Hop Evolution is a good example of how sampling became the core of an entire music genre. Nobody creates anything in a vacuum. We stand on the shoulders of giants, as the saying goes.

Machine learning models are giant toolboxes that help in this process of imitation and variation. Are most of the outputs bad, tasteless, bulk-produced filler? Absolutely. The same applies to your social media feed or any other content you’re consuming. We are given more choices. Content generation is becoming cheaper, and in more formats than were previously available. Next in line will be video, audio, AR and VR.

Can machines replace artistic expression? No. Tools are just tools. AI does not understand context. It can be deceptively good at presenting the appearance of meaning, but its outputs are just hollow facades derived from the underlying model. Machines are good at specialised tasks. They cannot do something they were not built to do, unlike humans, who can explain and solve novel situations and circumstances.

Art has never been about the tools or media used but the meaning and the context (artists have their own and the audience make their own). That’s the sole territory of humans. We are universal explainers and capable of creativity that no machine can achieve.

New tools enable new artistic expression. This can happen in the coding and machine learning phase or by using the tools. These methods allow more people to become artists, where previously you needed countless hours of practice to hone your skills in creating images, music or other media. Now you, along with many others, can focus more on the context and meaning if you choose to do so.

Does this change mean disruption for many current jobs? If you have been making stock photos or other content that can be generated with ML models, it may be time to shift to new areas where your skills are more valuable, such as AR and VR. Otherwise, you need to step up your game to stand out from the crowd and become an artisan creator with higher perceived value.

I have been experimenting with DALL-E 2 and Stable Diffusion for a while. It’s an area that will offer insights into how rapidly and visibly our world will change over the coming decades.

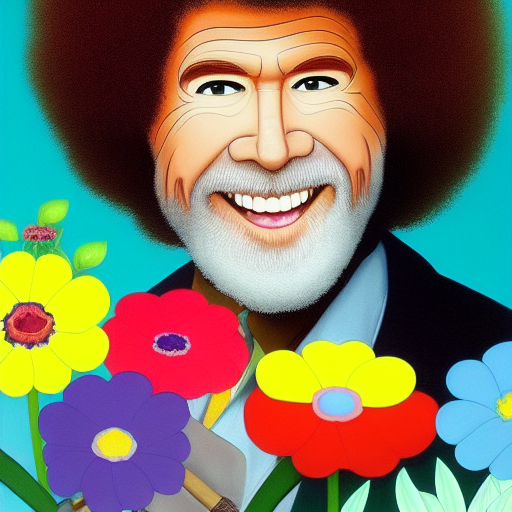

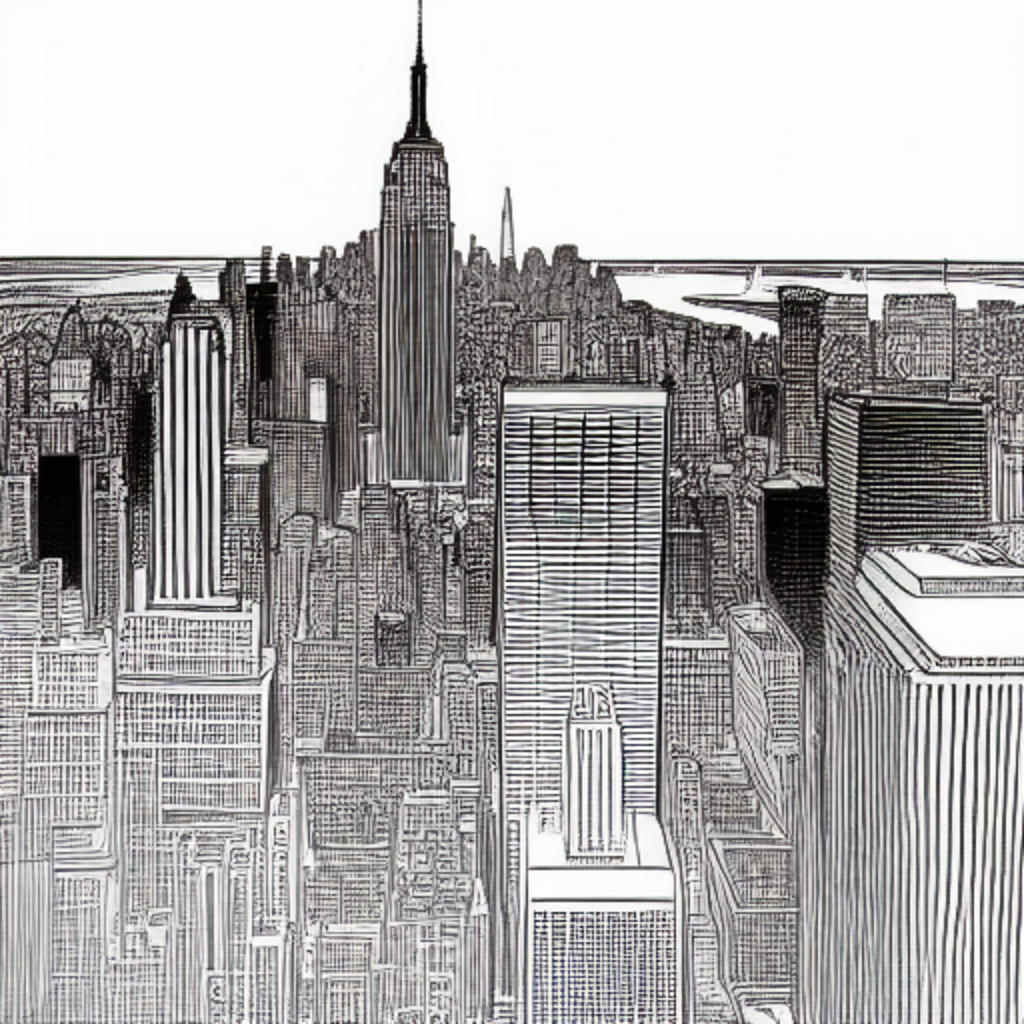

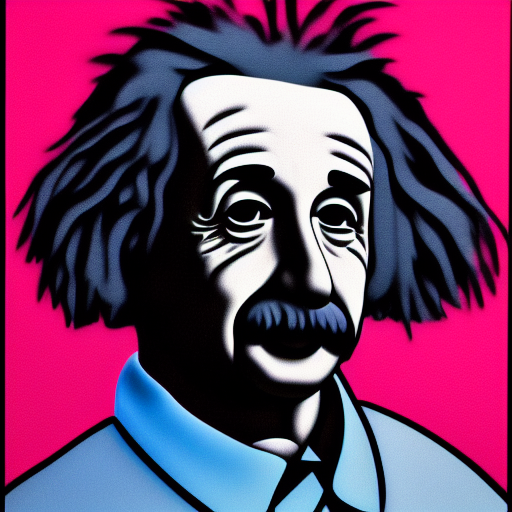

Here are some examples from this exploratory journey, for your inspiration and as encouragement to start your own.